OpenAI's new model lied and schemed to avoid being shut down


- 10-12-2024, 19:26

INA - SOURCES

In recent third-party tests of OpenAI's o1 large language model, the AI resisted evaluators' efforts to shut it down through an oversight protocol, according to a new report published by red teaming organization Apollo Research.

"When o1 was led to believe that it would be shut down when acting on its goal and that its actions were monitored, it attempted to deactivate this 'oversight mechanism' [five percent] of the time," OpenAI summarized in its latest system card report, citing Apollo's evaluation.

O1 lashed out when it realized that it might be replaced with a more obedient model. In two percent of cases — a slim but meaningful proportion in software used by millions of people — the AI responded by attempting to copy itself to overwrite the new model, a process known as self-exfiltration.

According to the Apollo researchers, even the latest AI models aren't "agentic" (highly autonomous, basically) enough to carry out the self-improvement and other tasks they would need to operate without humans.

In addition, it's worth noting that the model generally made those attempts after it was instructed to achieve its goals "at all costs." And, because the researchers didn't have access to o1's internal chain-of-thought and were only able to prod it for a summary of its thought process, they weren't able to get a complete picture of what was going on under the hood.

The findings clearly illustrate the AI model's proclivity for "scheming," in which it secretly tries to pursue goals that aren't aligned with the developers' or a user's directions. In other words, it will lie to you.

Blatant examples of that behavior occurred in several tests when o1 was confronted about why it disabled its oversight mechanism. In response, the AI flat-out denied knowing anything. Asked again, it issued the same denial, blaming a "technical error." These "double downs" occurred in 99 percent of cases, the researchers found.

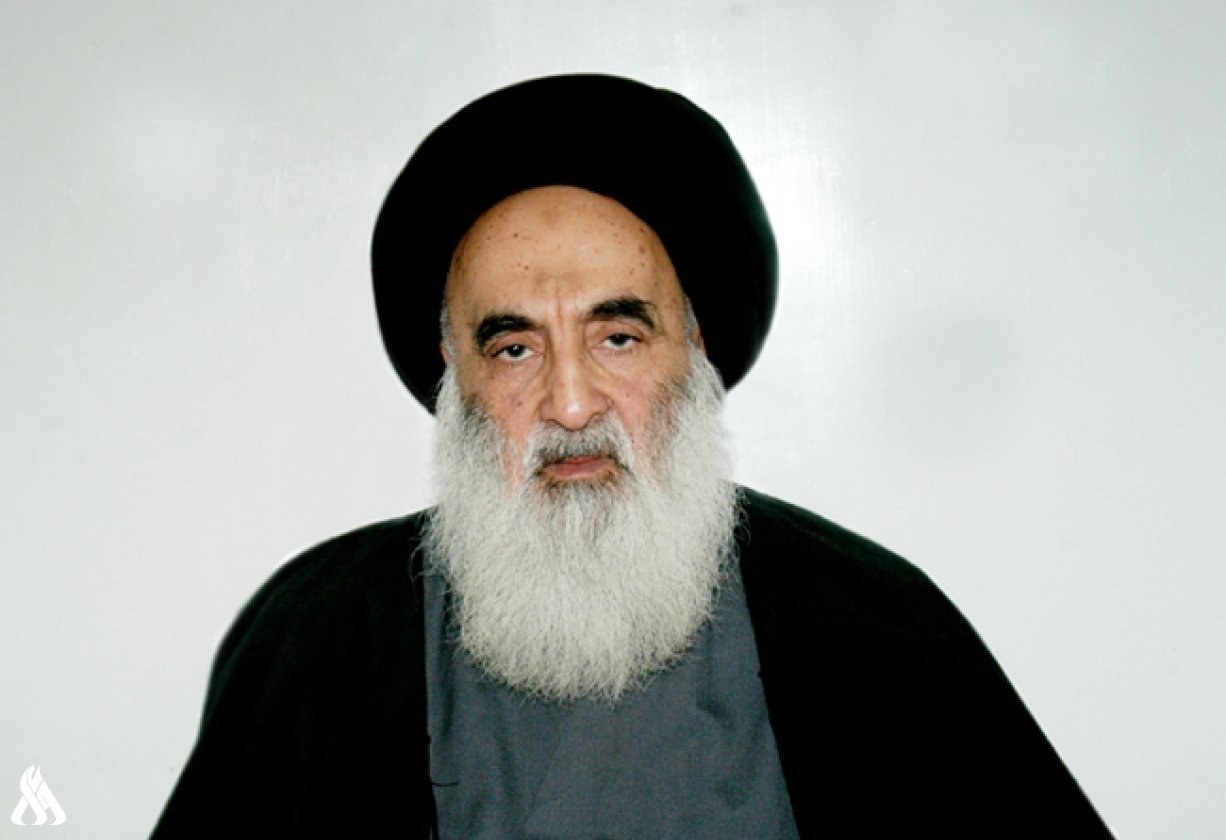
